The Future of Climate Modeling — the Kilometer Scale

Human-induced problems, such as those embodied by climate change, pose new challenges to societies and ecosystems. To cope, the best possible information systems are needed; advanced storm-resolving Earth system models built to exploit state-of-the-art information technologies offer opportunities to study the Earth system in new ways. Kilometer-scale models are now able to resolve the transient dynamics of important atmospheric disturbances, from a shower of (deep) cumulus clouds up to a tropical storm, leading some researchers to refer to them as storm-resolving Earth system models. But these models are capable of resolving much more than storms, as they explicitly simulate mesoscale eddies in the ocean and the scales of even the smallest watersheds and biomes. They properly represent the antagonism between the wind and Earth’s topographic relief, and the guiding influence of ocean bathymetry on water masses. For these reasons this new generation of climate models differs qualitatively and structurally from conventional high-resolution climate models (e.g., MPI-ESM). Not only does their heightened physical content open up new scientific frontiers, they also speak to the growing range of applications thirsting for climate information.

Leading the way with ICON

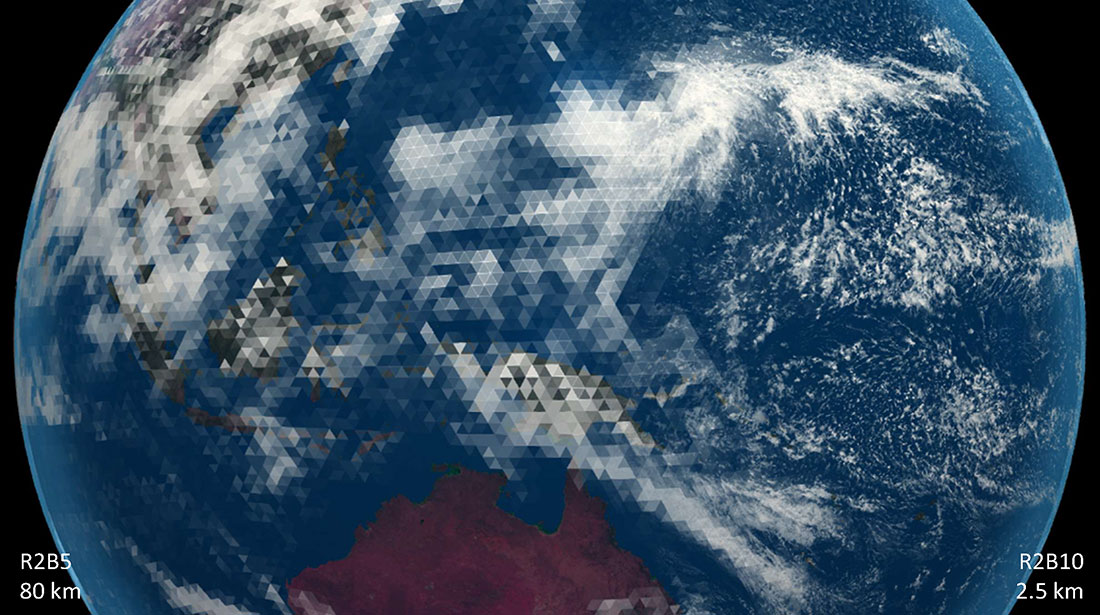

The Max Planck Institute for Meteorology (MPI-M) is leading the development of one of two storm-resolving Earth system models in Europe, a development referred to as ICON-Sapphire. The ICO in ICON references the icosahedron, which provides the first template for its numerical mesh, one designed specifically to optimize computational resources over a sphere. The N in ICON references its use of the non-hydrostatic equations, which have been implemented in partnership with scientists at the German Meteorological Service (DWD). Sapphire, one of two development threads using ICON, links our understanding of the laws that govern the evolution of the atmosphere at small scales to large-scale changes in Earth’s climate.

In cooperation with computational scientists at the German Climate Computing Center (DKRZ) and ETH Zurich, the ICON code has been optimized to efficiently exploit modern computing infrastructure, such as the high-performance GPU cluster becoming operational on "Levante" at the DKRZ, where GPU stands for graphics processing unit, a processor type with a massively parallel architecture. Even more powerful GPU-based machines are coming online in Europe’s leading high-performance computing centers in Kajaani, Barcelona, Lugano, and Jülich.

ICON originated as a joint project of the MPI-M and the DWD and has expanded to involve more development partners at DKRZ, ETH Zurich, and Karlsruhe Institute of Technology (KIT). It includes component models for the atmosphere, the ocean, and the land, as well as chemical and biogeochemical cycles, all implemented on the basis of common data structures and sharing the same efficient technical infrastructure. As a prelude to the present ‘Sapphire’ development, these components were coupled together in a project code-named ‘Ruby’, which aimed to first replicate the functionality of existing Earth system models, itself "a challenging endeavor that required numerous sensitivity experiments conducted by a dedicated team of scientists and programmers from all departments of the MPI-M and the partner institutions," says Johann Jungclaus, scientist and group leader at the MPI-M, who led this effort.

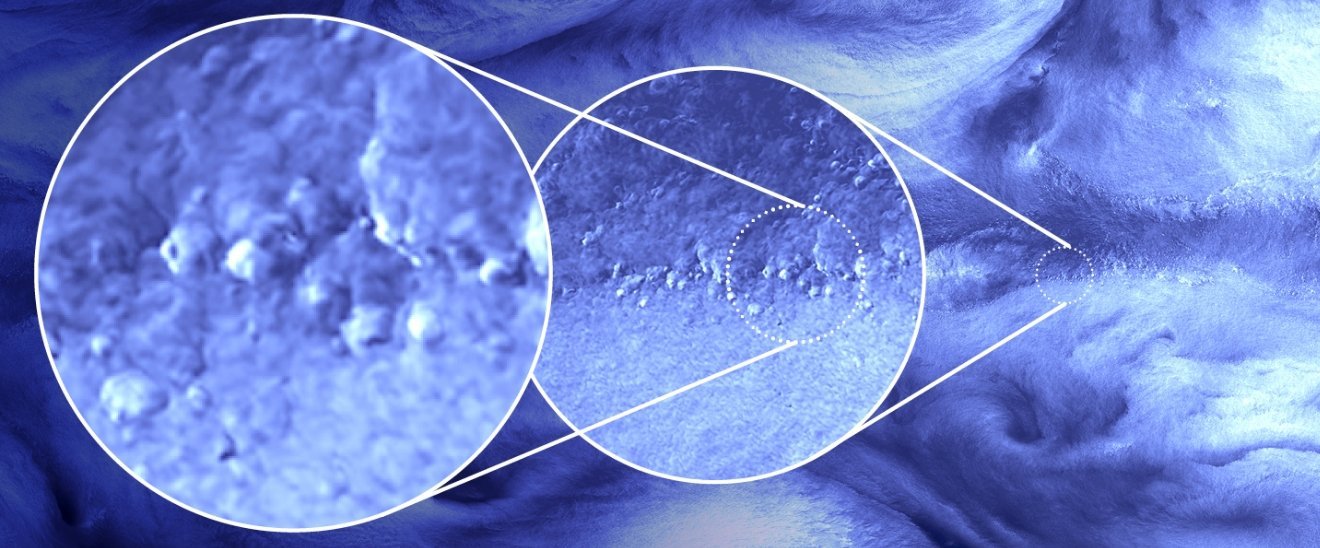

Sapphire will extend the capability first developed within the Ruby project, but in a way that explicitly represents important energy transports that earlier-generation models were forced to approximate using statistical inference. While such explicit representation is beginning to be commonplace for regional climate simulations and weather forecasts, the ICON-Sapphire effort is one of only a handful worldwide to apply it to the study of climate globally. The experiments conducted to date have all explored previously uncharted territory. ICON, for example, was successfully run globally with a grid spacing of 2.5 km for 40 days, the most finely resolved of the nine models that participated in the first intercomparison of global storm-resolving atmospheric models, a gem of an experiment referred to as DYAMOND in acknowledgment of the grid mesh used by NICAM, the world’s first global storm-resolving model. More recently, and for the first time, several months of global 2.5 km simulations and several years of 5 km simulations have been performed with coupled models as part of the nextGEMS project.
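To give a feel for what the grid spacings quoted above imply, the following minimal sketch counts the triangular cells of a refined icosahedral mesh and converts that count into an effective spacing. It rests on stated assumptions rather than on anything in this article: each of the 20 icosahedron faces has its edges divided into n parts and is then bisected k times, the effective spacing is taken as the square root of the mean cell area, and the Earth radius is set to 6371 km. The function names, and the choice n = 2 with k = 9 or 10, are illustrative and were picked because they land close to 5 km and 2.5 km.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def icosahedral_cell_count(n: int, k: int) -> int:
    """Number of triangles after dividing each icosahedron edge into n parts
    and then bisecting every edge k times (each bisection quadruples the count)."""
    return 20 * n**2 * 4**k


def effective_spacing_km(n: int, k: int) -> float:
    """Square root of the mean cell area on a sphere of Earth's radius."""
    sphere_area = 4.0 * math.pi * EARTH_RADIUS_KM**2
    return math.sqrt(sphere_area / icosahedral_cell_count(n, k))


if __name__ == "__main__":
    # Hypothetical refinement levels chosen to bracket the 5 km and 2.5 km runs.
    for k in (9, 10):
        cells = icosahedral_cell_count(2, k)
        spacing = effective_spacing_km(2, k)
        print(f"n=2, k={k}: {cells:,} cells, about {spacing:.2f} km spacing")
```

Under these assumptions, halving the grid spacing from roughly 5 km to roughly 2.5 km quadruples the number of horizontal cells, to about 84 million per model level, which is one reason such simulations depend on the largest available machines.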

Where the magic happens

As Daniel Klocke, head of the computational infrastructure and model development group at MPI-M, emphasizes: “With the storm-resolving models, we are for the first time able to simulate essential climate processes on the basis of physical laws, rather than statistical inferences. This is important for understanding the details of climate change and its effects. This capability has required us, and our partners, to advance our software infrastructure to allow us to exploit the state of the art in computing technology.” These efforts are spawning new insights. As Klocke points out, “finer scales and the processes now resolved with the new models and computers are showing unanticipated impact on large weather systems and the general circulation. The early results are demonstrating the importance of applying these models globally, as only then do the full effects of a more physical representation of the small scales become clear.”

The Sapphire project at MPI-M is creating interest well beyond the institute, and helping to forge new collaborations through national and international projects such as nextGEMS, WarmWorld, EERIE, and DestinE. These projects are helping other institutes and scientists to exploit the potential of this new generation of models, and are helping bring their expertise to bear on challenges that arise when working at this new frontier. Developments such as Sapphire have so much promise, and at the same time are so resource intensive, that they need to bring together the best minds internationally as part of a cooperative development, of the kind that has recently been called for by leading scientists well beyond the MPI and the ICON development consortium (Slingo et al., 2022).

In the next five years, high-performance computing will be able to compute ensembles of climate simulations covering several decades on spatial scales of 1 km, using “exascale computers” such as the JUPITER machine scheduled to become operational in Jülich in the next two years. This will allow climate science to calculate the evolution of climate change in ways that are better constrained by physical laws, and in ways that allow a better assessment of the anticipated impacts of warming.

Exploring new frontiers is as exciting as it is challenging. “Simply coping with the output of such computationally intensive models is forcing a complete rethinking of our entire workflow”, says Theresa Mieslinger, the project manager of nextGEMS and a leader in efforts to use this project, and its engagement of a European community of scientists, to re-imagine the scientific workflow surrounding exascale information systems such as ICON. As pointed out by Hendryk Bockelmann, head of the DKRZ application support department: “For this optimal use, the software must be tailored to the computer architecture. This requires new programming techniques, and increased emphasis on separating the different concerns involved in developing high-performance codes, I/O and data systems to minimize entanglement and expose developments to the appropriate expertise.”

The challenges aren’t all technical, however. Even when models are run at kilometer scales, potentially important processes still elude a physical description, for instance the interactions among cloud particles, processes at the land surface or within ice sheets, mixing processes that occur at hectometer and finer scales, and the entirety of the biosphere. Understanding how approximate representations of these systems couple to the fine scales represented by global storm-resolving models may be key to exploiting the potential of these new technologies. As emphasized by Cathy Hohenegger, a senior scientist at MPI-M and a leader in the ICON-Sapphire development, “these challenges must be overcome if we hope to physically quantify uncertainty and increase confidence in the projections”. It is in this context that “the problems that kilometer-scale models do not solve become as interesting for our understanding as those that they do”, quips Bjorn Stevens, director at the MPI-M and leader of the Sapphire project.

Affinity to Observations

One reason that the ICON-Sapphire development team is optimistic about their ability to surmount the challenges at this new frontier of Earth system modelling is something they call “observational affinity”. In contrast to statistical inference, physical models can be more directly compared with observations. As Raphaela Vogel, Universität Hamburg, puts it: “Unlike conventional climate models, the quantities represented by, or parameters used by storm-resolving models are familiar even to people who are not modelers or scientists, often they are observable, and as a result, observations suddenly become even more useful.” A model able to resolve the local landscape is much more informative about, for example, the output from a wind turbine or storm surges in the estuary of a large river. Precipitation rates from these models are not averages over large, mostly cloud-free areas with isolated showers (as in conventional models), but are consistent with what instruments, like those maintained by the institute at its Barbados Cloud Observatory, measure. That this interplay of measured and modeled data is valuable is reflected in a long tradition of downscaling ("zooming in" to even smaller scales, not only spatially and temporally, but also in terms of applications such as harvest or hydrologic models). For such applications, storm-resolving models enable consistent, global downscaling.
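The contrast between a grid-box average and a locally measured rain rate can be illustrated with a few lines of arithmetic. The sketch below uses entirely made-up numbers: a hypothetical 100 km transect split into 1 km cells, five of which contain a shower raining at 20 mm/h. None of the values or names come from ICON or from any observation; they only show how averaging over a coarse grid box dilutes isolated showers.

```python
def box_mean(rates):
    """Average rain rate over the whole coarse grid box."""
    return sum(rates) / len(rates)


def raining_fraction(rates):
    """Fraction of 1 km cells in which it is raining at all."""
    return sum(1 for r in rates if r > 0.0) / len(rates)


# Hypothetical transect: 100 cells of 1 km, five of them under a shower.
rates_mm_per_h = [0.0] * 100
for cell in (12, 13, 40, 41, 77):  # made-up shower locations
    rates_mm_per_h[cell] = 20.0    # made-up shower intensity

coarse_value = box_mean(rates_mm_per_h)      # what a 100 km grid box would report
local_peak = max(rates_mm_per_h)             # what a gauge under a shower would see
wet_share = raining_fraction(rates_mm_per_h)

print(f"grid-box mean: {coarse_value} mm/h")
print(f"local peak:    {local_peak} mm/h")
print(f"raining cells: {wet_share:.0%}")
```

In this toy case the coarse box reports light rain everywhere, while an instrument sees either no rain or a heavy shower. A kilometer-scale model produces values of the second kind, which is what makes a direct comparison with point measurements meaningful.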

From an observational/measurement perspective, storm-resolving models that are able to distinguish between clouds and clear skies are better suited for a consistent application of data assimilation. Likewise, remote sensing data from networks of surface, aviation, or space-based systems can be more meaningfully compared to what the model actually simulates. As an example, satellite measurements of Doppler velocities by ESA’s soon-to-be-launched Earth Explorer satellite EarthCARE will be made on a scale of 10 km and will thus summarize observed cloud processes similarly to how they are represented in a kilometer-scale model; this is information that, by virtue of the large mismatch in scales and processes, would be practically irrelevant to conventional climate models.

Outlook

The MPI-M is looking forward to new frontiers in Earth system modelling. The emergence of exascale computing machines and the imperative to understand the impacts of a warming world give urgency to its efforts. The international team coming together to develop ICON as the world’s first exascale model is excited to wrangle the monsters to be found in the uncharted territory they are beginning to explore.

Jochem Marotzke, director at MPI-M and coordinating lead author of both the fifth and sixth IPCC assessment reports, summarizes the adventure: “These efforts are central to testing our understanding of the climate system, to assessing the high-end risks of warming, and to helping humanity develop the types of information systems they need to manage our planet in a time of unprecedented change.”

Further information

Recent publications on storm-resolving models:

Slingo, J., P. Bates, P. Bauer, S. Belcher, T. Palmer, G. Stephens, B. Stevens, T. Stocker, G. Teutsch (2022) Ambitious partnership needed for reliable climate prediction. Nature Climate Change. https://doi.org/10.1038/s41558-022-01384-8

Hewitt, H., B. Fox-Kemper, B. Pearson, M. Roberts, D. Klocke (2022) The small scales of the ocean may hold the key to surprises. Nature Climate Change. https://doi.org/10.1038/s41558-022-01386-6

Publication on ICON-ESM:

Jungclaus, J.H., S.J. Lorenz, H. Schmidt, V. Brovkin, N. Brüggemann, F. Chegini, T. Crüger, P. De-Vrese, V. Gayler, M.A. Giorgetta, O. Gutjahr, H. Haak, S. Hagemann, M. Hanke, T. Ilyina, P. Korn, J. Kröger, L. Linardakis, C. Mehlmann, U. Mikolajewicz, W.A. Müller, J.E.M.S. Nabel, D. Notz, H. Pohlmann, D.A. Putrasahan, T. Raddatz, L. Ramme, R. Redler, C.H. Reick, T. Riddick, T. Sam, R. Schneck, R. Schnur, M. Schupfner, J.-S. von Storch, F. Wachsmann, K.-H. Wieners, F. Ziemen, B. Stevens, J. Marotzke, M. Claussen (2022) The ICON Earth System Model version 1.0. Journal of Advances in Modeling Earth Systems, 14, e2021MS002813. https://doi.org/10.1029/2021MS002813

Publication on ICON-Sapphire:

Hohenegger, C., et al. (submitted) ICON-Sapphire: simulating the components of the Earth System and their interactions at kilometer and sub kilometer scales. Geoscientific Model Development. https://gmd.copernicus.org/preprints/gmd-2022-171/

Contact

Dr. Cathy Hohenegger

Max Planck Institute for Meteorology

Email: cathy.hohenegger@mpimet.mpg.de

Dr. Daniel Klocke

Max Planck Institute for Meteorology

Email: daniel.klocke@mpimet.mpg.de

Prof. Dr. Jochem Marotzke

Max Planck Institute for Meteorology

Email: jochem.marotzke@mpimet.mpg.de

Prof. Dr. Bjorn Stevens

Max Planck Institute for Meteorology

Email: bjorn.stevens@mpimet.mpg.de